A multi-disciplinary group project on simulation-to-drone transfer learning.

About The Project

As part of the professional awareness module in level 5, I was tasked with working in a multi-disciplinary team on a group project called Drone-Ing on. The goal of the project was to create a simulation of a drone in 3D space, based on a room in the university, and have it travel between two points, with the idea of transferring what was learned in simulation to a real drone. Building on this base learning, it is hoped that the drone can avoid obstacles and detect various objects using its camera. Once the project is in a demonstrable state, the plan is to hand it over to the university, which intends to use it as the basis for a research paper that I would like to contribute to.

Tracking Script

To use the learning from the Unity environment, the object's position needed to be tracked. The tracking script was written in C# using the SQLite .NET package and stored the position every second in a local database.
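The logging idea can be sketched outside Unity as well. The following is a minimal illustration in Python using the standard `sqlite3` module, not the project's C# script. The table name `positions`, its columns, and the `read_position` callback are assumptions made for the example.

```python
import sqlite3
import time


def open_db(path=":memory:"):
    """Open the database and create the (hypothetical) positions table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS positions ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "x REAL NOT NULL, y REAL NOT NULL, z REAL)"
    )
    return conn


def log_position(conn, x, y, z=None):
    """Store one sample; z is optional and saved as NULL when omitted."""
    conn.execute("INSERT INTO positions (x, y, z) VALUES (?, ?, ?)", (x, y, z))
    conn.commit()


def track(conn, read_position, samples, interval=1.0, sleep=time.sleep):
    """Record `samples` positions, waiting `interval` seconds between them.

    `read_position` is a hypothetical callback returning (x, y) or (x, y, z).
    """
    for i in range(samples):
        log_position(conn, *read_position())
        if i < samples - 1:
            sleep(interval)
```

Passing `sleep` as a parameter keeps the one-second wait out of the way when the logger is exercised without a running simulation.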

Training in Unity

The initial training was done in Unity, where a NavMesh and NavMeshAgents were used to path-find between two points while avoiding pillars in the room. The tracking script, attached to the agent, recorded the x and y position (with z optional) and wrote it to the database.

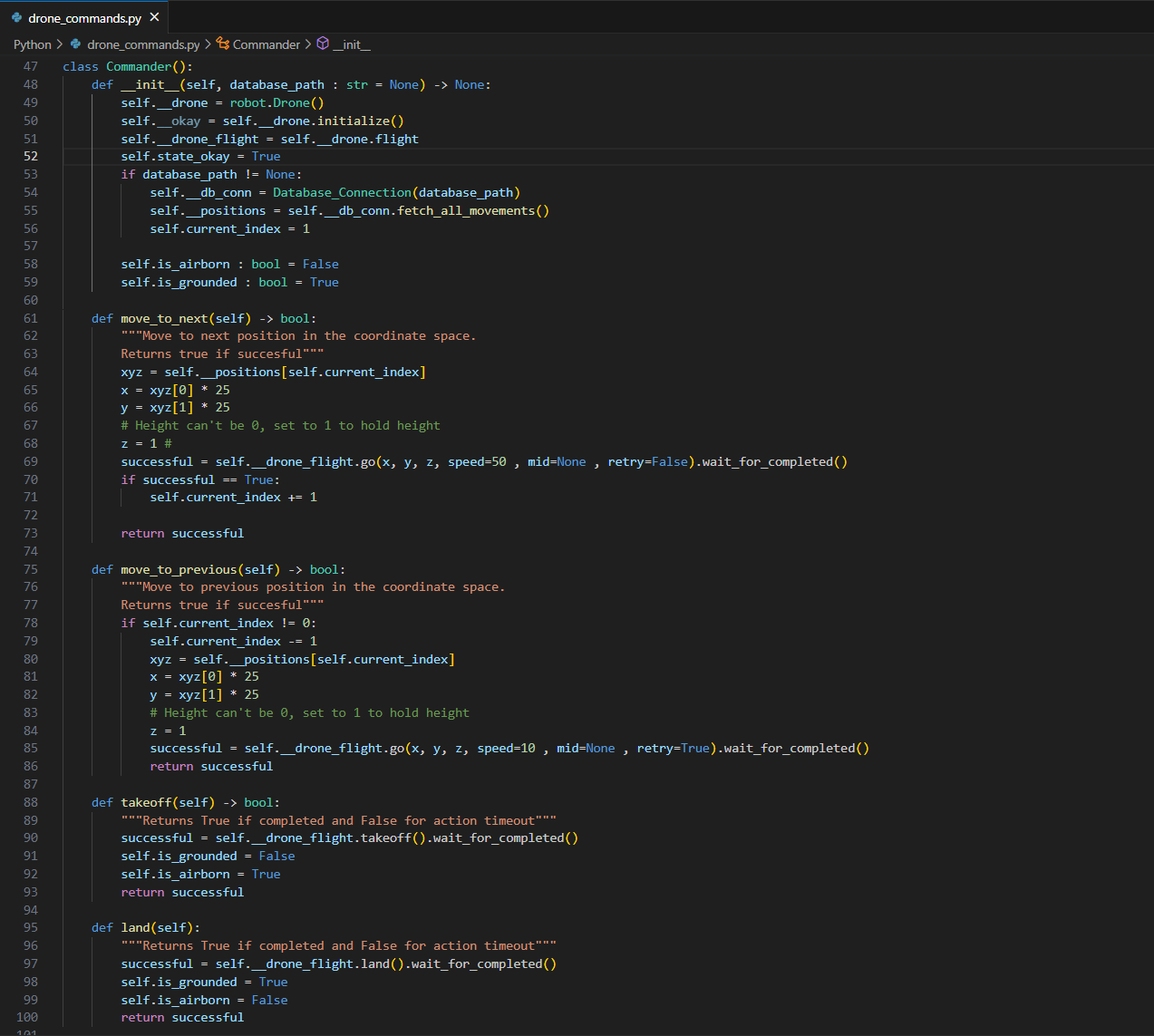

Building the flight controller

The drone used was a DJI educational-series drone. A Python script containing a commander class uses the drone's API to control it. The class has functions to take off, land, and move the drone to the next or previous position in the database. Should an error occur and the connection be lost, the drone will always land in place.

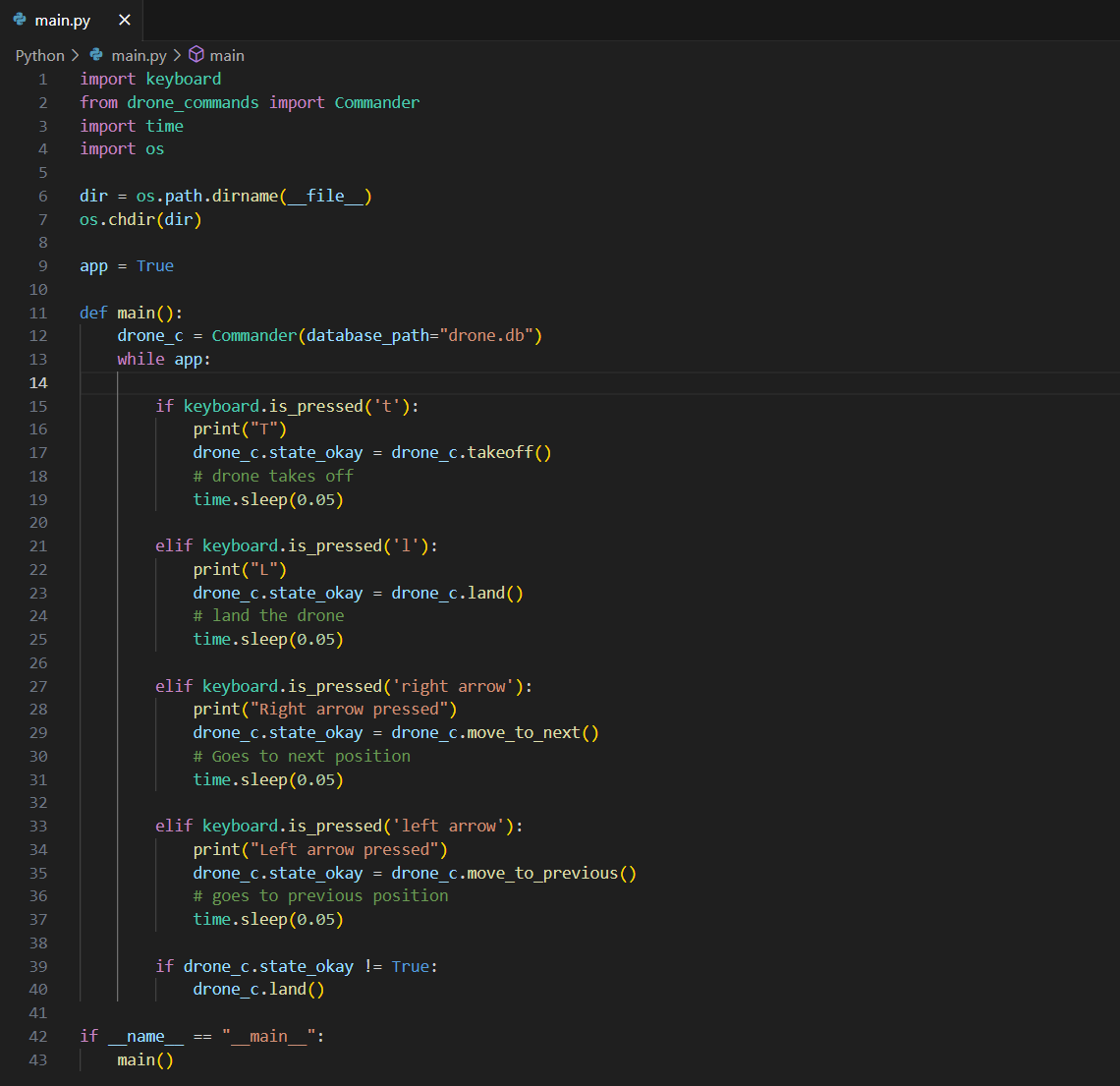
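A rough sketch of how such a commander class might be structured is shown here. It does not reproduce the project's code or the DJI API: the `drone` object and its `takeoff`, `land`, and `move_to` methods are hypothetical stand-ins, and `FakeDrone` exists only so the example can run without hardware. The point it illustrates is that any failed command falls back to a landing attempt.

```python
class FakeDrone:
    """Hypothetical stand-in for the real drone API, used for illustration."""

    def __init__(self, fail_on_move=False):
        self.log = []
        self.fail_on_move = fail_on_move

    def takeoff(self):
        self.log.append("takeoff")

    def land(self):
        self.log.append("land")

    def move_to(self, x, y):
        if self.fail_on_move:
            raise ConnectionError("link lost")
        self.log.append(("move_to", x, y))


class Commander:
    """Steps a drone through stored (x, y) waypoints, landing on any error."""

    def __init__(self, drone, waypoints):
        self.drone = drone
        self.waypoints = list(waypoints)
        self.index = 0
        self.airborne = False

    def _emergency_land(self):
        try:
            self.drone.land()
        except Exception:
            pass  # the link may already be gone; nothing more can be sent
        self.airborne = False

    def _safely(self, action, *args):
        """Run one drone command; on failure, try to land and report False."""
        try:
            action(*args)
            return True
        except Exception:
            self._emergency_land()
            return False

    def take_off(self):
        self.airborne = self._safely(self.drone.takeoff)
        return self.airborne

    def land(self):
        self._safely(self.drone.land)
        self.airborne = False

    def _go_to(self, new_index):
        # Refuse to move while grounded or past either end of the path.
        if not self.airborne or not 0 <= new_index < len(self.waypoints):
            return False
        if self._safely(self.drone.move_to, *self.waypoints[new_index]):
            self.index = new_index
            return True
        return False

    def next(self):
        return self._go_to(self.index + 1)

    def previous(self):
        return self._go_to(self.index - 1)
```

Routing every command through `_safely` keeps the land-on-failure behaviour in one place rather than repeating it in each method.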

Control Loop

The class loads all positions from the database and lets the user move the drone to the next or previous position using the arrow keys. It would have been entirely possible to let the drone move of its own accord, but an element of manual control needed to be maintained at all times until obstacle avoidance and detection measures were implemented.
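The stepping logic can be sketched roughly as follows. This is an illustration under assumptions rather than the project's loop: the `positions` table and its columns are hypothetical, keys arrive as plain strings (`"right"`, `"left"`, `"q"`) instead of real keyboard events, and the generator only yields the waypoint to fly to instead of commanding a drone.

```python
import sqlite3


def load_positions(conn):
    """Read every stored (x, y) position in the order it was recorded."""
    rows = conn.execute("SELECT x, y FROM positions ORDER BY id")
    return [(x, y) for x, y in rows]


def targets_from_keys(positions, keys):
    """Yield the waypoint to fly to for each valid key press.

    "right" steps forward, "left" steps back, "q" stops the loop.
    Unknown keys and steps past either end of the path are ignored.
    """
    index = 0
    for key in keys:
        if key == "q":
            return
        step = {"right": 1, "left": -1}.get(key, 0)
        new_index = index + step
        if step == 0 or not 0 <= new_index < len(positions):
            continue
        index = new_index
        yield positions[index]
```

Because each move waits for a key press, the operator decides when the drone advances, which matches the requirement of keeping a person in control until obstacle avoidance is in place.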

Testing the initial learning

During a test, the drone was able to move from one end of the room to the other, but this required some manual adjustments to the data. The next step is to refine the shape and size of the room model, run more pathfinding, and store the resulting data.

Where to from here?

Once we are confident that the sizes of the drone and room model are accurate, we may consider allowing the drone to fly fully autonomously with a manual override.
